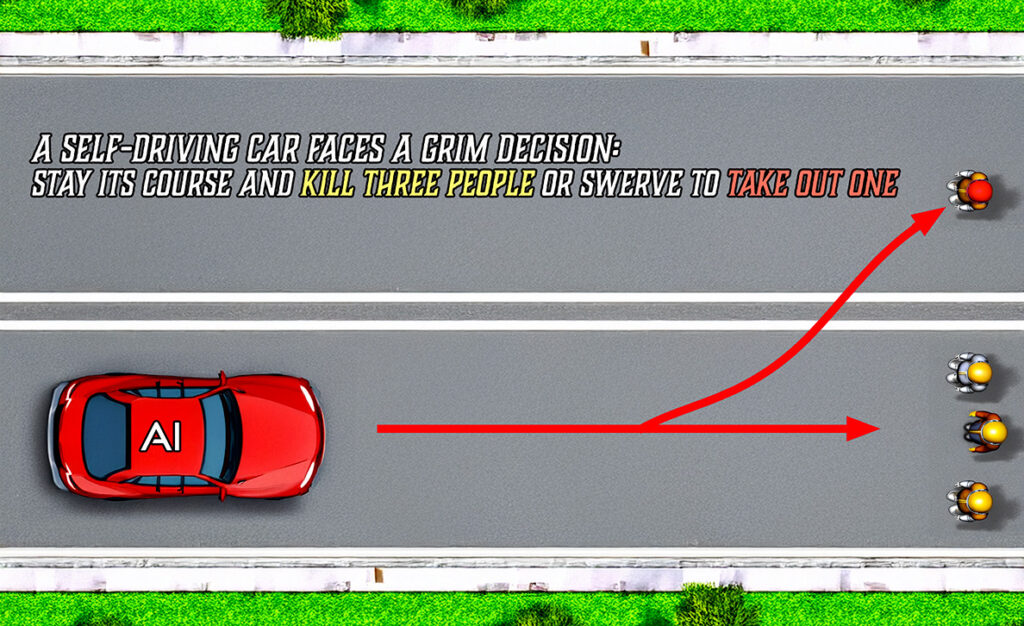

This article focuses on the real-life “trolley problem” that self-driving cars present: artificial intelligence systems forced to make split-second decisions of a moral nature. Among the living moral philosophies is utilitarianism, which holds that the rightness or wrongness of an action depends on its consequences, and which might support instructing an AI to swerve and hit one person instead of three. I would like to argue for this kind of consequentialist reasoning in AI systems, akin to the utilitarianism of the 19th-century English philosopher John Stuart Mill.

Picture this: you go to cross the street, walking about 10 feet behind a group of three friends. A self-driving car heading toward the crosswalk realizes it is going to crash and needs to make one of two decisions. Should it continue forward and crash into the group of friends, killing three people, or swerve toward you, killing one person instead of three? On a strictly utilitarian count, hitting three people when you could hit one instead is three times as wrong.

Suddenly, we find ourselves in the middle of a modern-day trolley problem, but this time, instead of a hypothetical lever, it’s real, and it’s barreling down the street. And many more dilemmas arise: who is responsible? The engineers who designed it? The society that demanded its deployment? Or does responsibility dissipate into the opaque logic of the system?

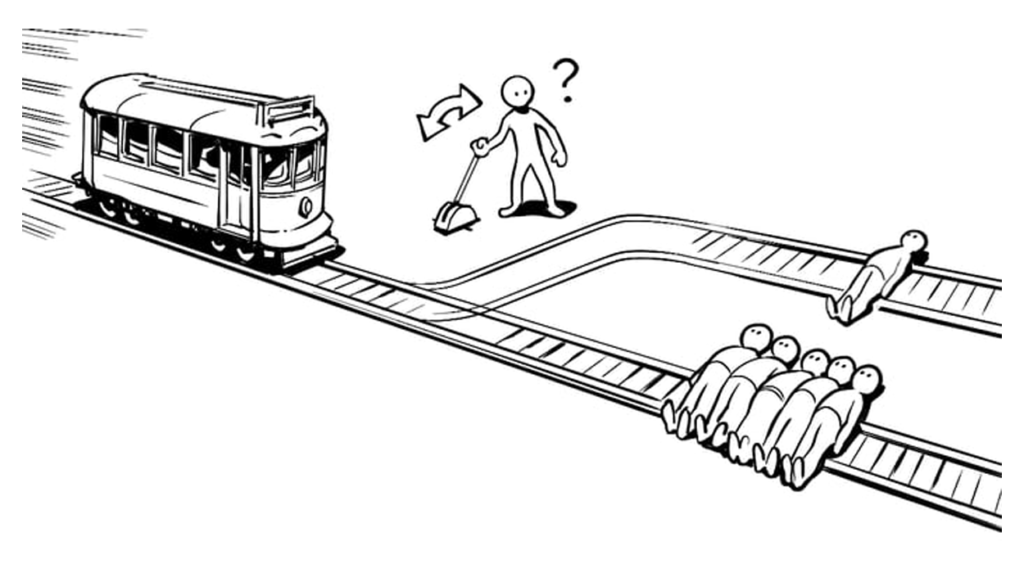

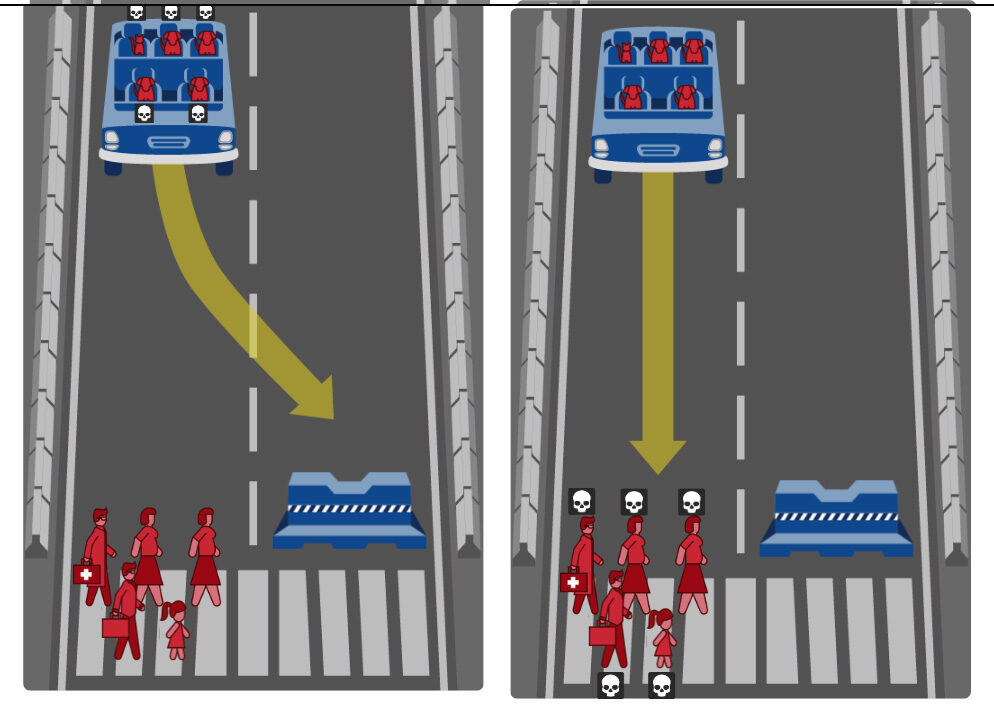

The trolley problem is a philosophical thought experiment designed to investigate moral decision-making. Picture a runaway trolley hurtling down a track toward five people who are tied to it and cannot move. You have the power to pull a lever and send the trolley down a different track, where only one person is tied up. You take a deep breath and think. What would you do? Would you pull the lever, causing one death while saving five, or do nothing and allow five people to perish?

This scenario underscores the clash between two ethical paradigms: utilitarianism, which prioritizes minimizing harm and maximizing overall well-being, and deontology, which emphasizes following moral rules like “do not intentionally harm others,” regardless of the outcome.

While utilitarianism and deontology are not the only two ethical theories that one could apply to this situation, they are the most commonly mentioned. Within both popular and academic discourse, the trolley problem has become a kind of shorthand for discussing the difference between these two ethical frameworks. It is the preferred problem for this purpose because it is simple, stark, and closely analogous to the self-driving car dilemma.
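The contrast between the two frameworks can be sketched in a few lines of Python. This is only an illustration: the `Option` class, its fields, and both decision functions are hypothetical names invented here, and real ethical theories are far richer than a two-line rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One possible action in a dilemma (hypothetical model)."""
    name: str
    deaths: int
    requires_intervention: bool  # True if the agent must actively redirect harm


def utilitarian_choice(options):
    """Pick whichever option leads to the fewest deaths."""
    return min(options, key=lambda o: o.deaths)


def deontological_choice(options):
    """Rule out options that require actively harming someone,
    then pick the least harmful of what remains.
    If every option requires intervention, fall back to all options."""
    permitted = [o for o in options if not o.requires_intervention]
    return min(permitted or options, key=lambda o: o.deaths)


# The classic trolley problem as described above.
trolley = [
    Option("do nothing", deaths=5, requires_intervention=False),
    Option("pull the lever", deaths=1, requires_intervention=True),
]

print(utilitarian_choice(trolley).name)
print(deontological_choice(trolley).name)
```

Under these assumptions the utilitarian rule pulls the lever and the deontological rule does nothing, which is exactly the disagreement the thought experiment is meant to expose.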

The Problem with AI Decision-Making

The self-driving car dilemma has at its core an uncomfortable truth: humans may design the algorithms, but the moment of decision-making often lies beyond our comprehension. AI systems, especially those built on machine learning, operate in a probabilistic, emergent fashion. They don’t simply follow a rigid set of rules; they interpret inputs and produce outputs extrapolated from the patterns they have learned.

The real challenge is that the trolley problem, in its simplicity, assumes we can clearly define outcomes and values. But the real world is messy and chaotic, full of nuance and moral ambiguity. In this complex network of cause and effect, the AI has to act quickly. And because its reasoning emerges from layers of data processing, even the people who designed the system struggle to understand why it chose as it did. This phenomenon is commonly referred to as the “black box problem.”

A deeper ethical challenge is at work here. The AI’s decision-making doesn’t just happen within bounds set by explicit instructions. It emerges from a web of abstractions that even the creators of the AI may not fully comprehend. This issue goes beyond technology. It enters the realm of philosophy and human civilization. How much autonomy are we willing to cede to systems whose operations are fundamentally inscrutable?

Ethical Questions that Emerge in Other Areas

The Framework: What Should AI Prioritize?

For an understanding of how an AI might determine these questions, we must look at the ethical frameworks that are woven into its algorithms. They can be subdivided, in broad strokes, into three categories:

Hypothetical Example of How AI Could Make the Decision

The AI runs millions of simulations in milliseconds to evaluate possible outcomes for every action. Here’s a simplified example:

Potential Outcomes Determined by AI
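As a rough illustration of the outcome evaluation described above, the sketch below ranks candidate actions by expected casualties. It is not how any real vehicle works: instead of running simulations, it uses a small hand-written probability table, and every action name and number in `ACTIONS` is an invented assumption.

```python
# Hypothetical outcome tables: each action maps to a list of
# (probability, casualties) pairs. All values are made up for illustration.
ACTIONS = {
    "continue straight": [(0.9, 3), (0.1, 0)],
    "swerve": [(0.8, 1), (0.2, 0)],
    "brake hard": [(0.5, 3), (0.3, 1), (0.2, 0)],
}


def expected_harm(outcomes):
    """Probability-weighted casualty count for one action."""
    return sum(probability * casualties for probability, casualties in outcomes)


def rank_actions(actions):
    """Return (expected_harm, action_name) pairs, least harmful first."""
    return sorted((expected_harm(outcomes), name) for name, outcomes in actions.items())


for harm, name in rank_actions(ACTIONS):
    print(f"{name:18s} expected casualties = {harm:.2f}")
```

With these made-up numbers, swerving ranks first because it has the lowest expected casualty count. A purely utilitarian controller would stop there; other frameworks would add constraints before choosing.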

Coding ‘Moral’ Decisions into AI:

Ongoing discussions focus on how to encode or regulate ethical dilemmas like the “trolley problem” thought experiment in artificial intelligence. Regulatory bodies and industry groups, including the UNECE in Europe and NHTSA in the U.S., are acknowledging these ethical dilemmas. However, they have not developed clear universal codes of AI ethics. They are essentially saying, “We can’t tell you to do the right thing, because we don’t all agree on what the right thing is.”

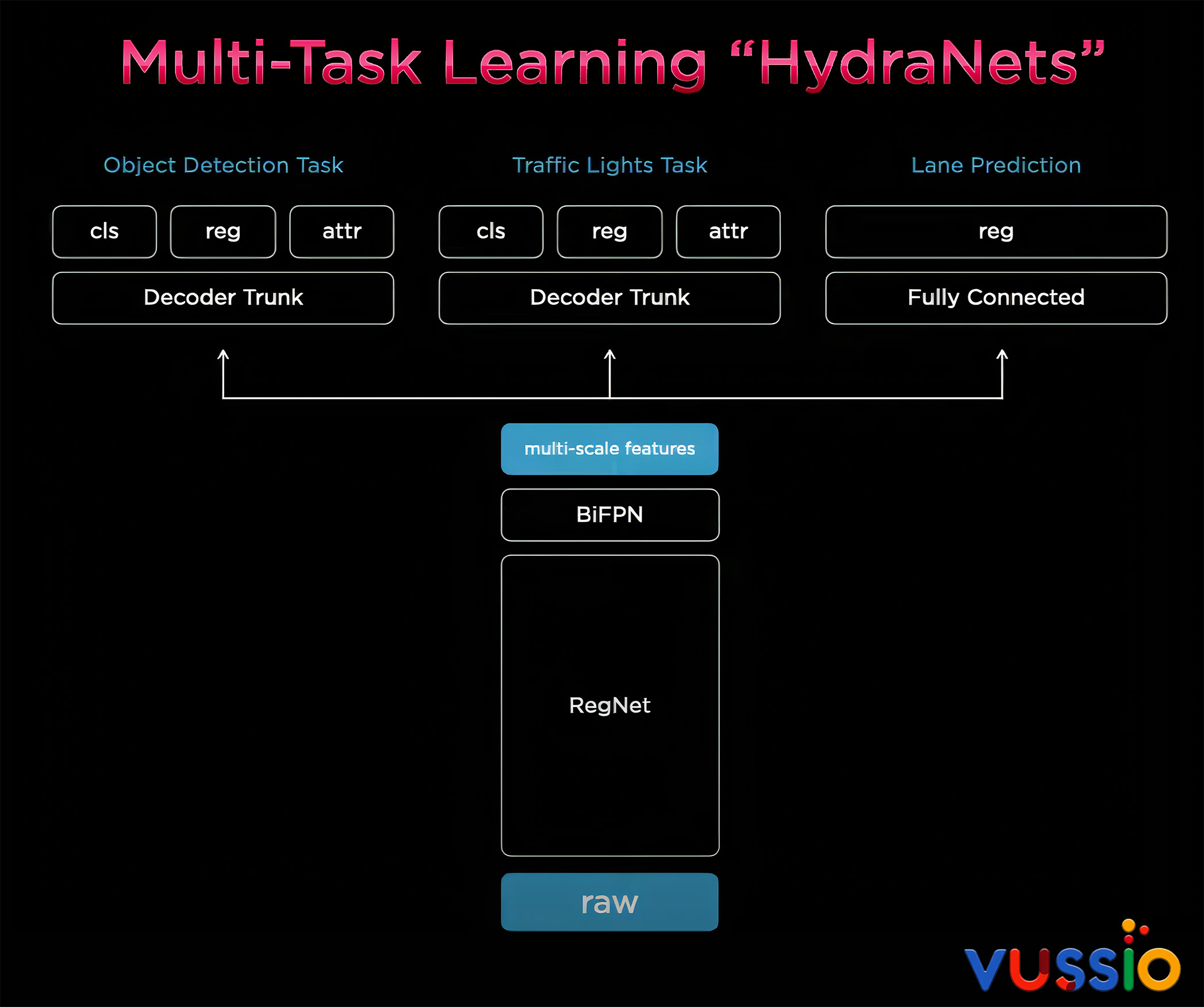

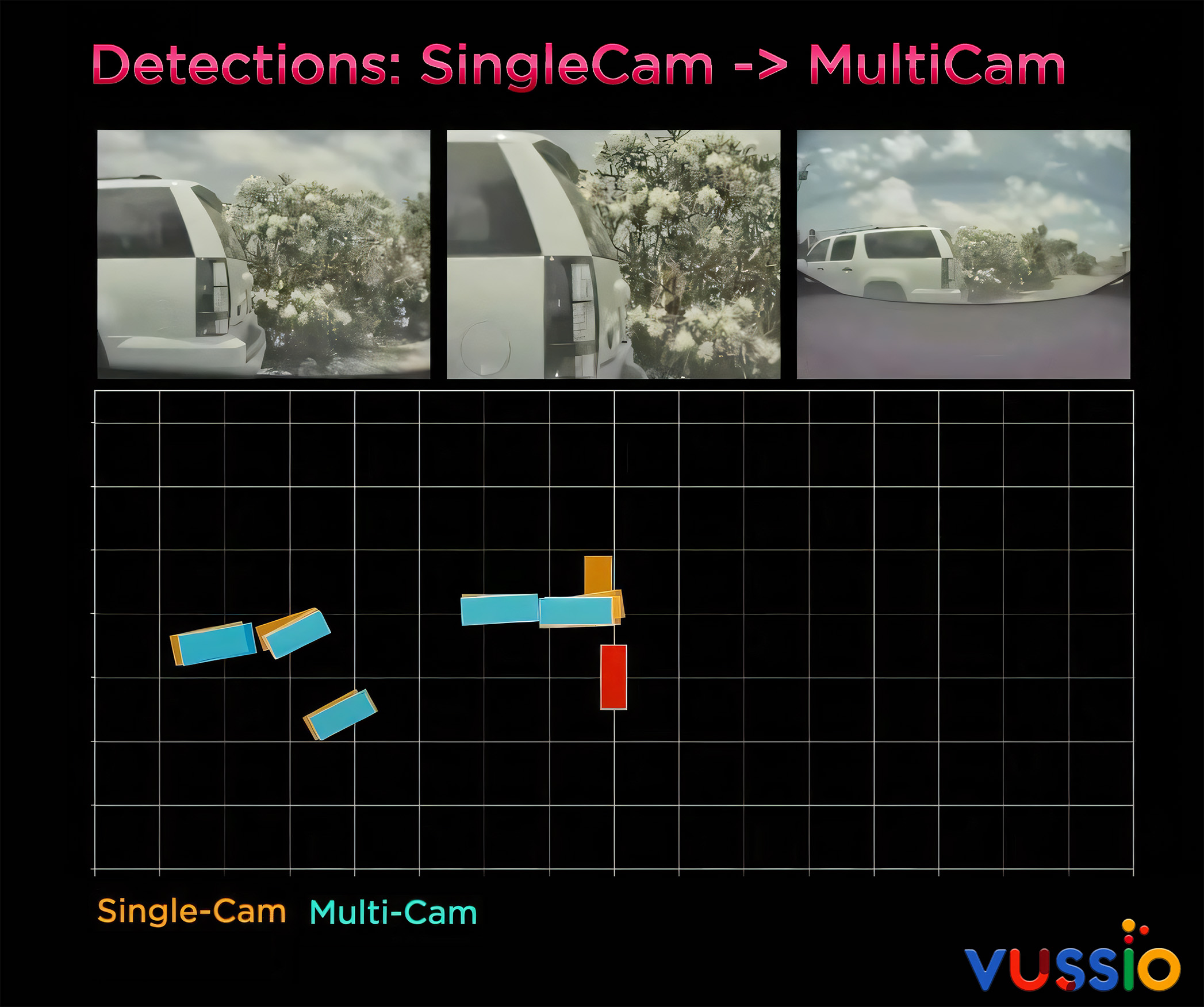

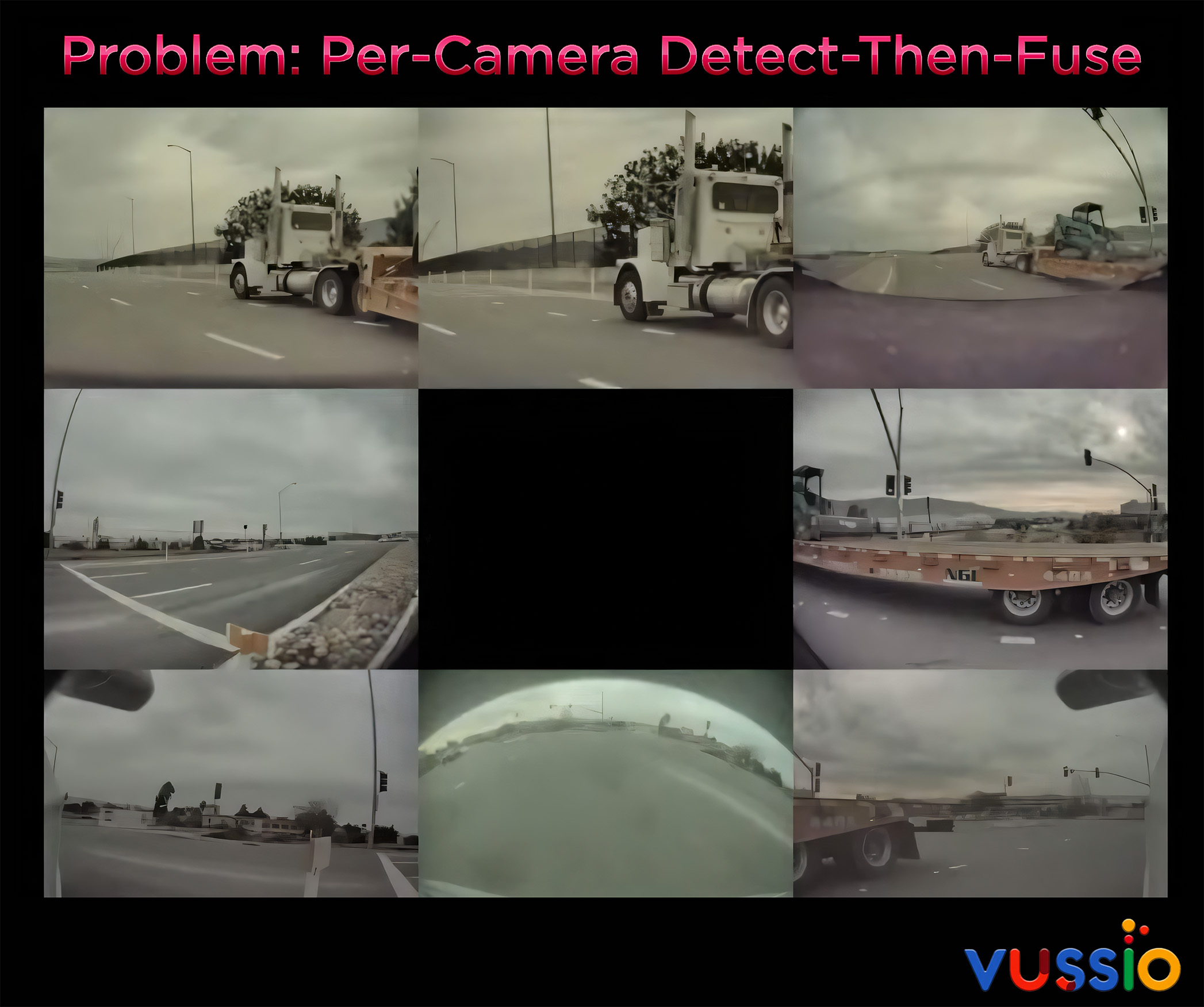

How Tesla’s Self-Driving Cars Make Their Decisions

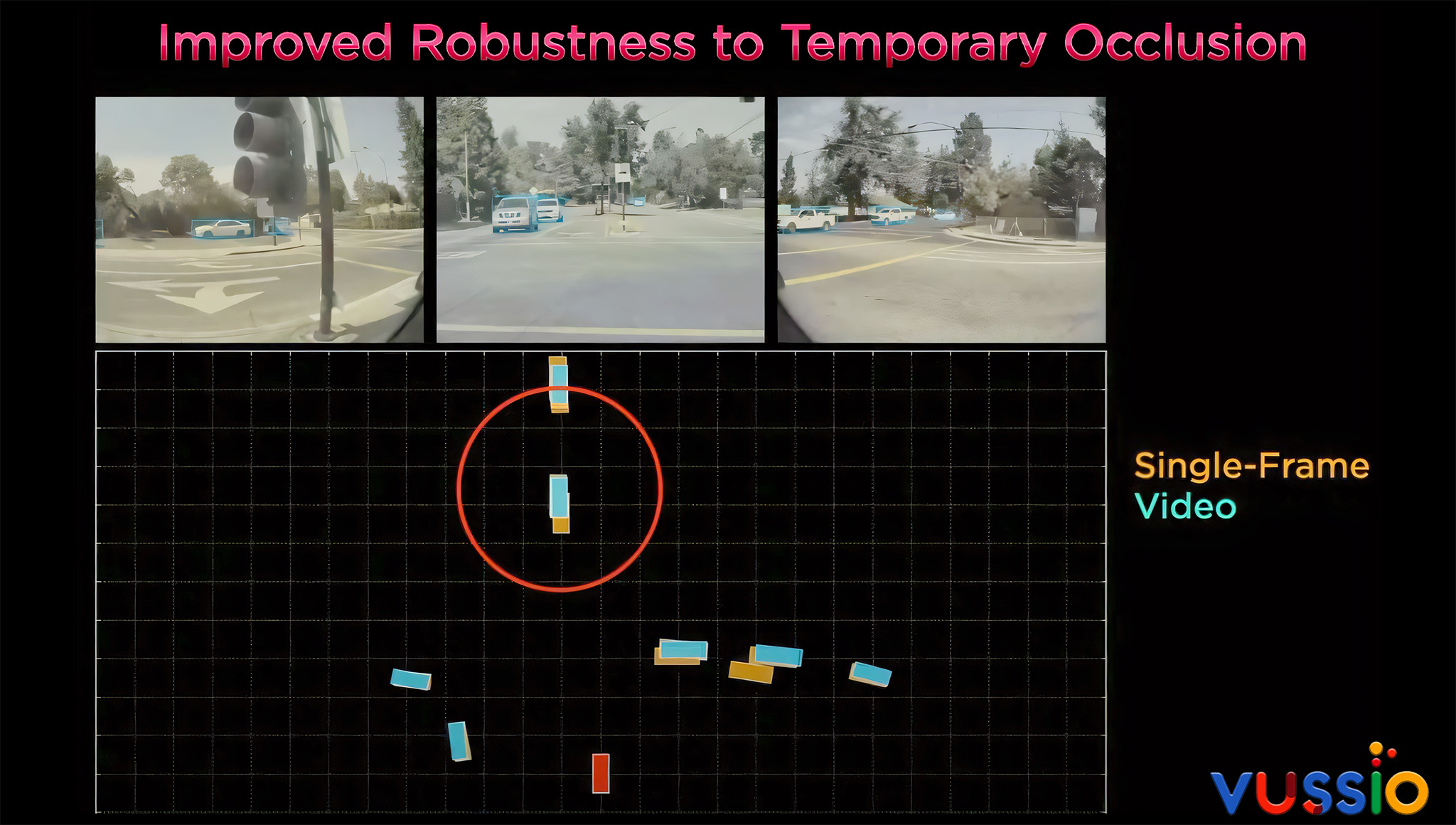

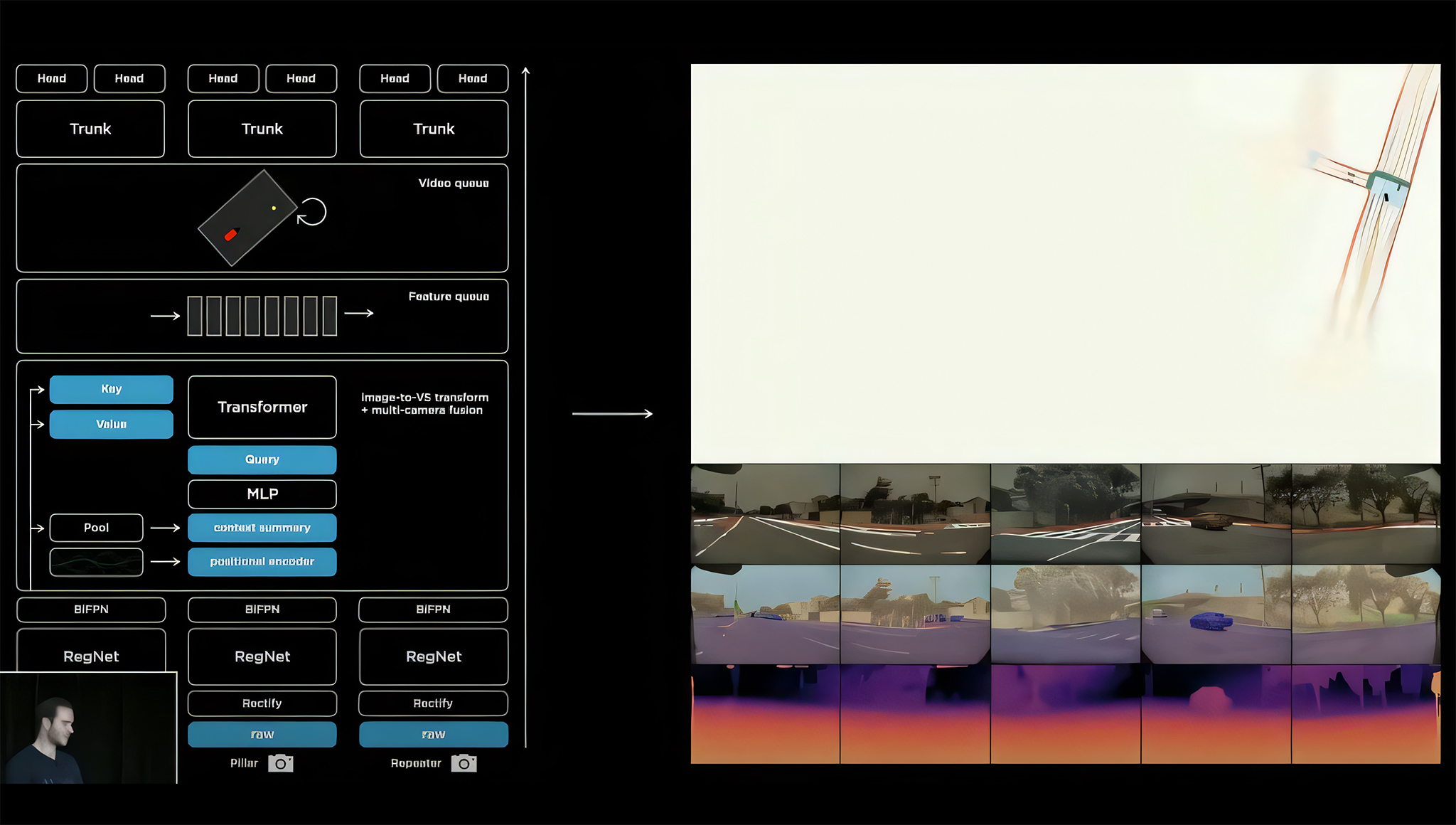

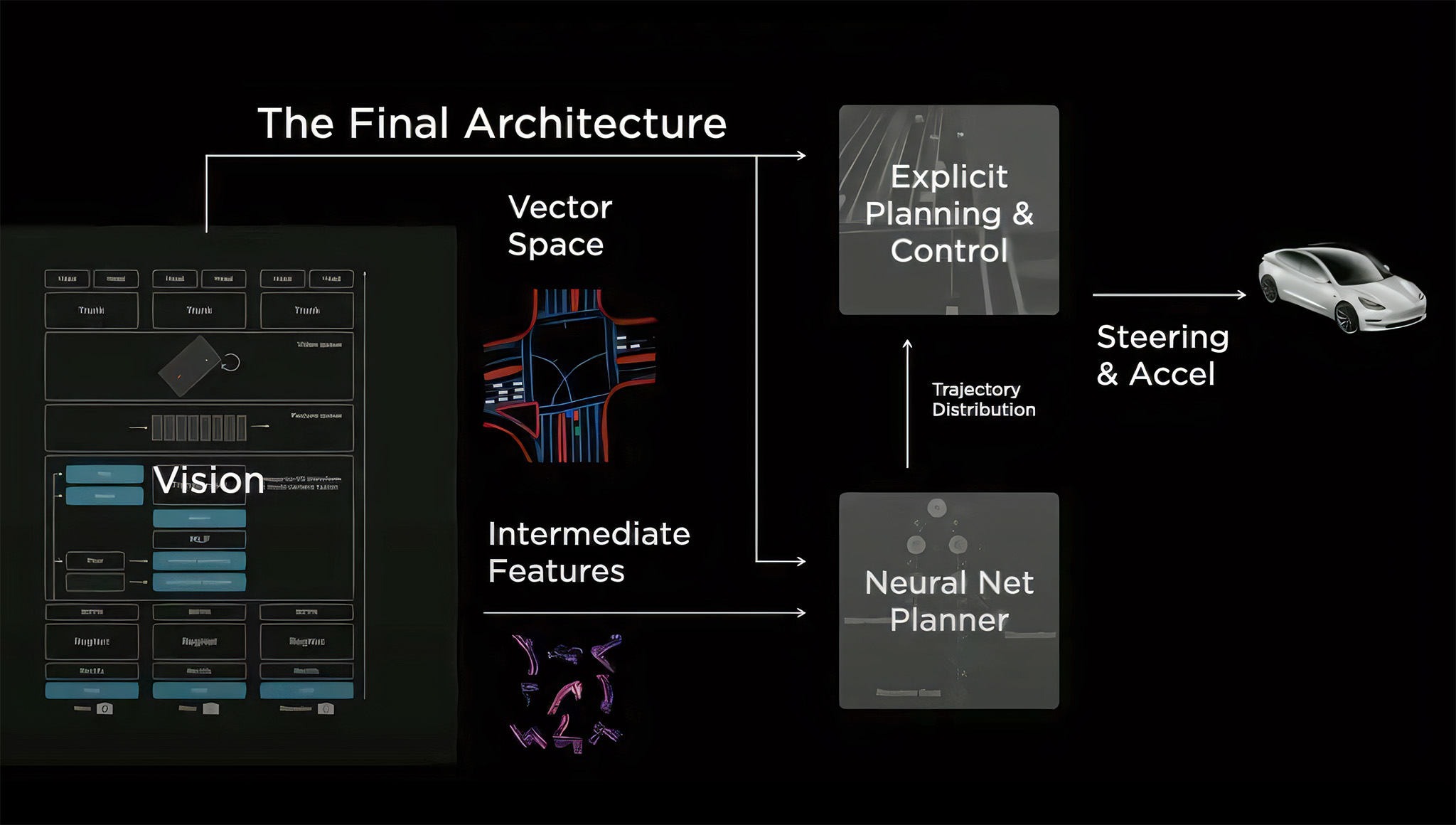

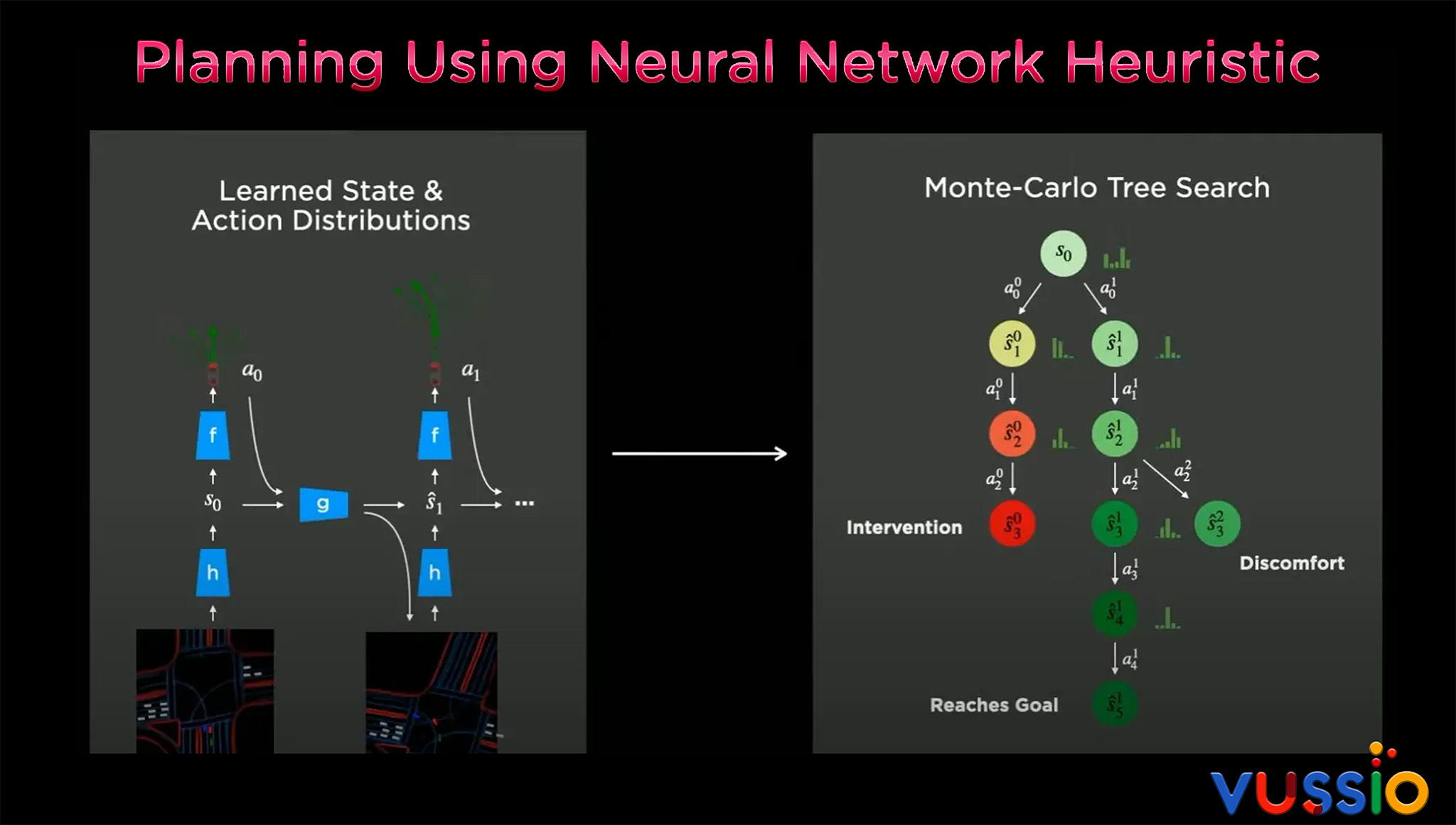

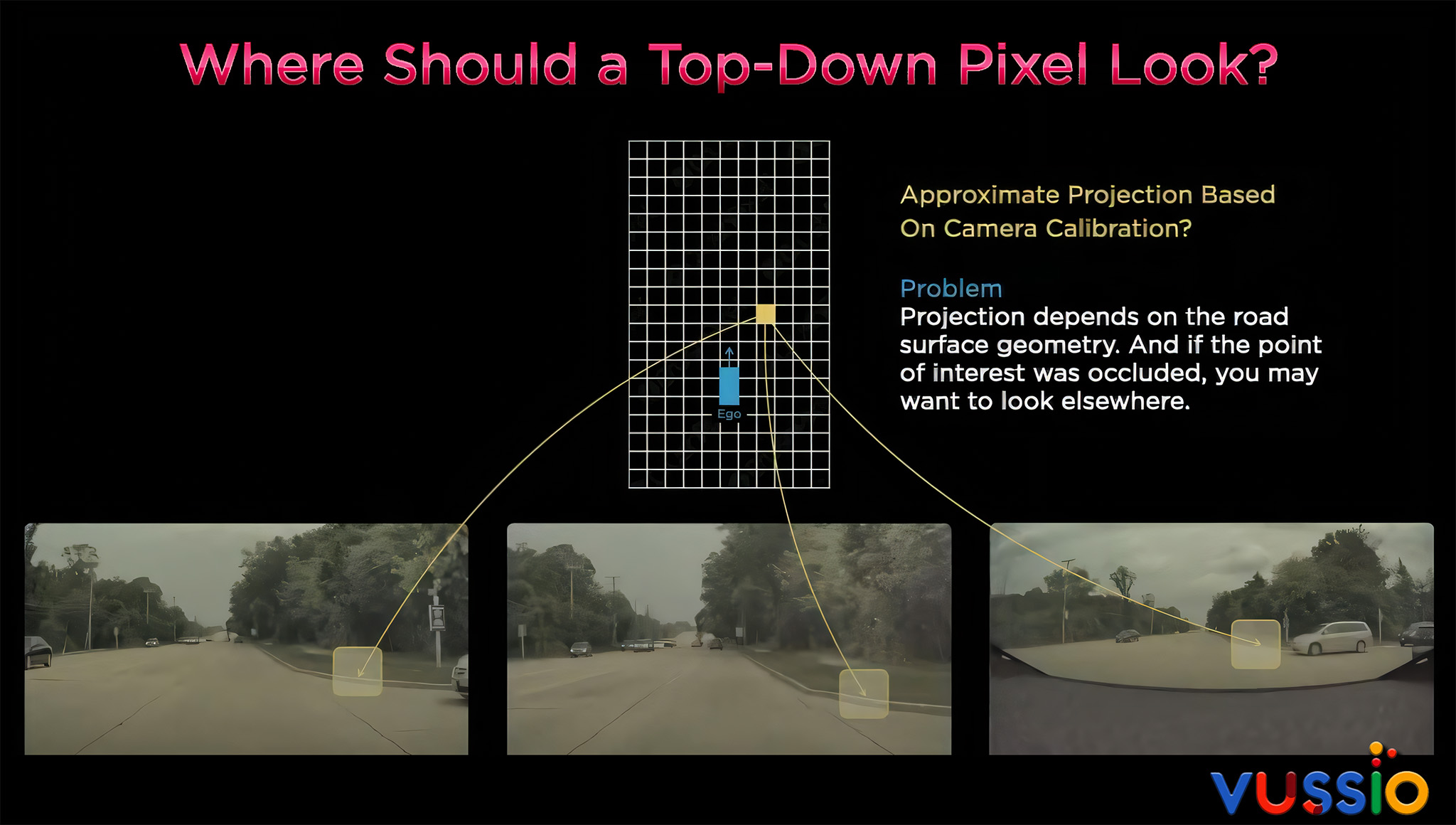

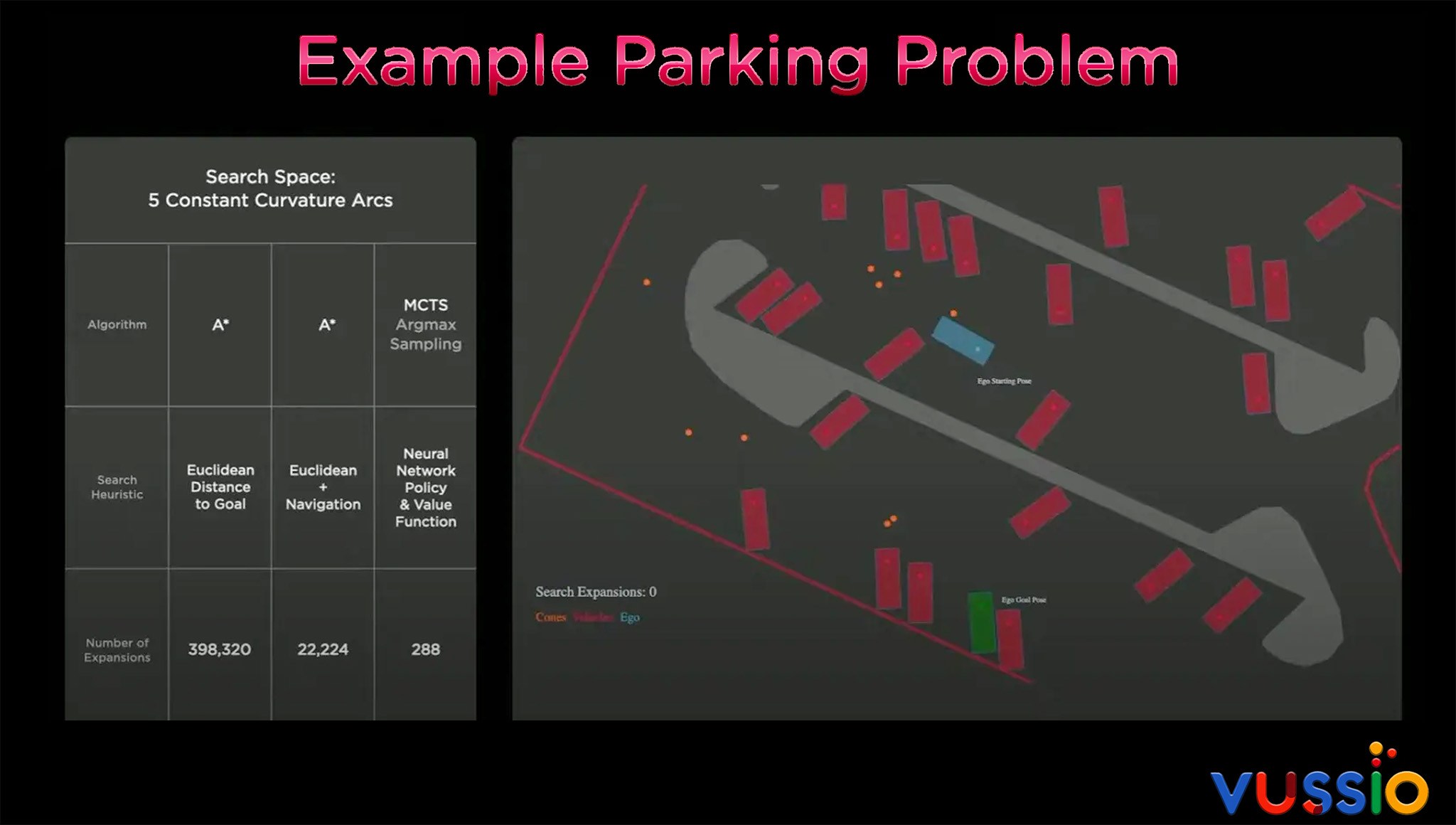

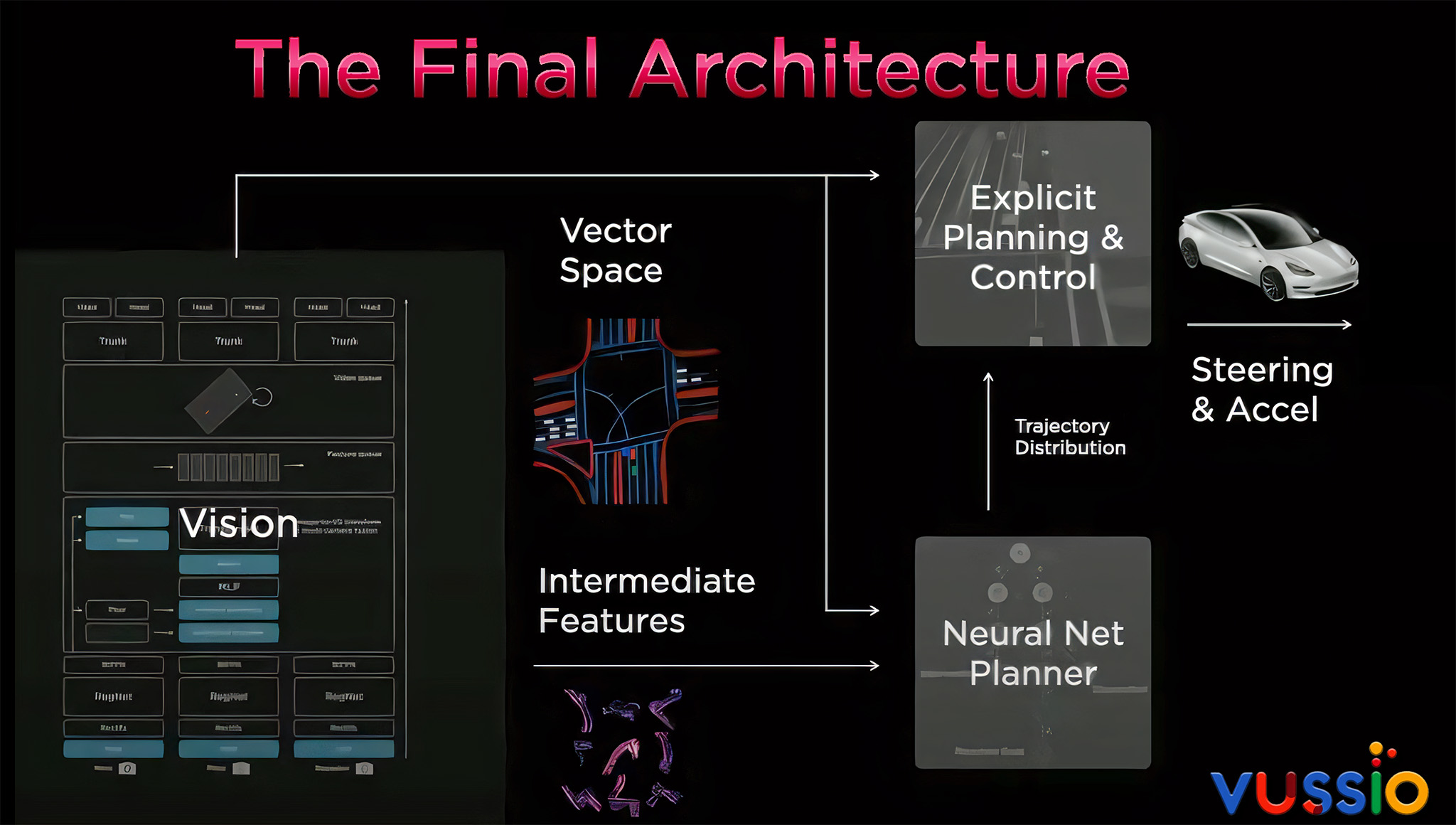

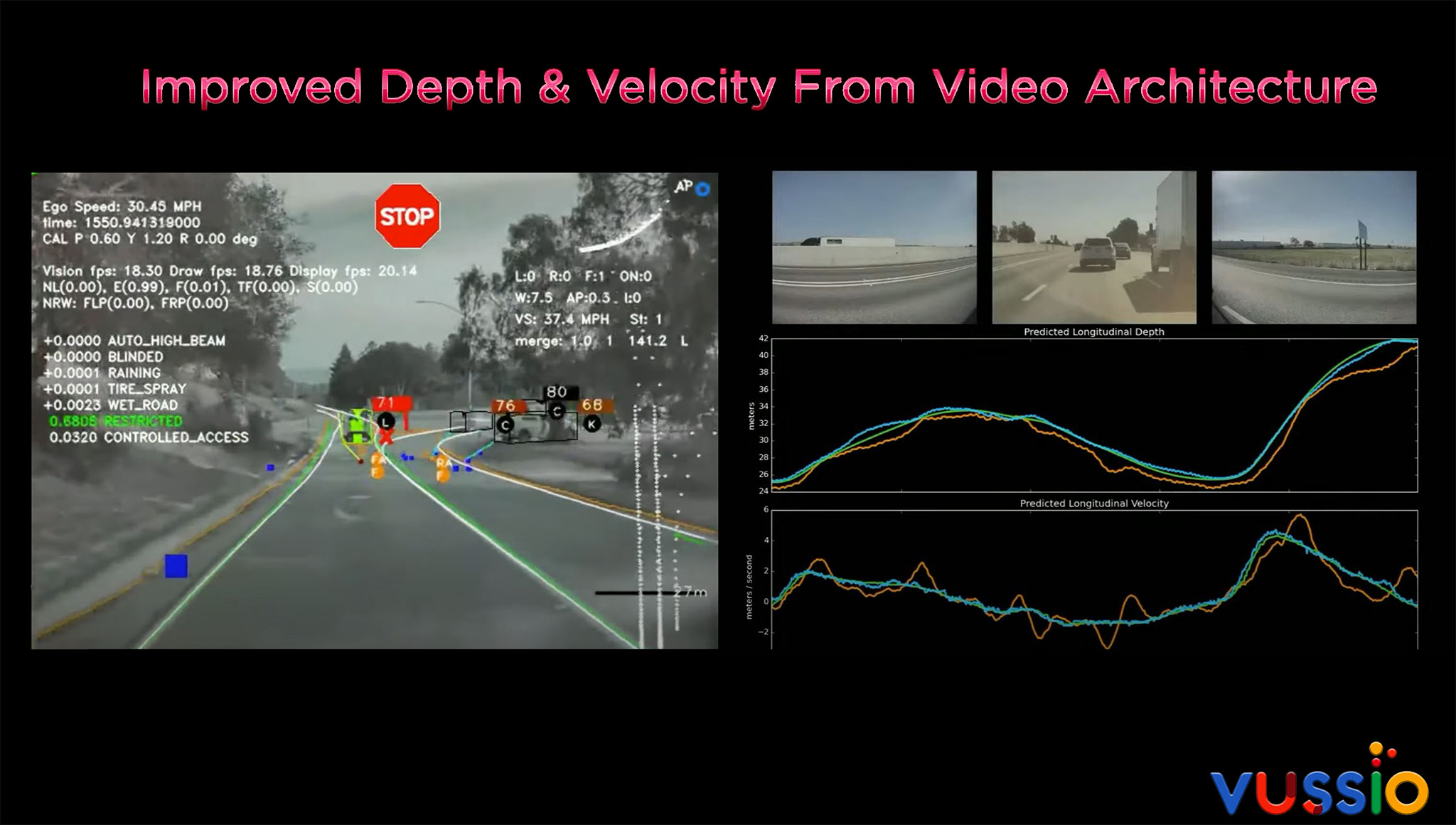

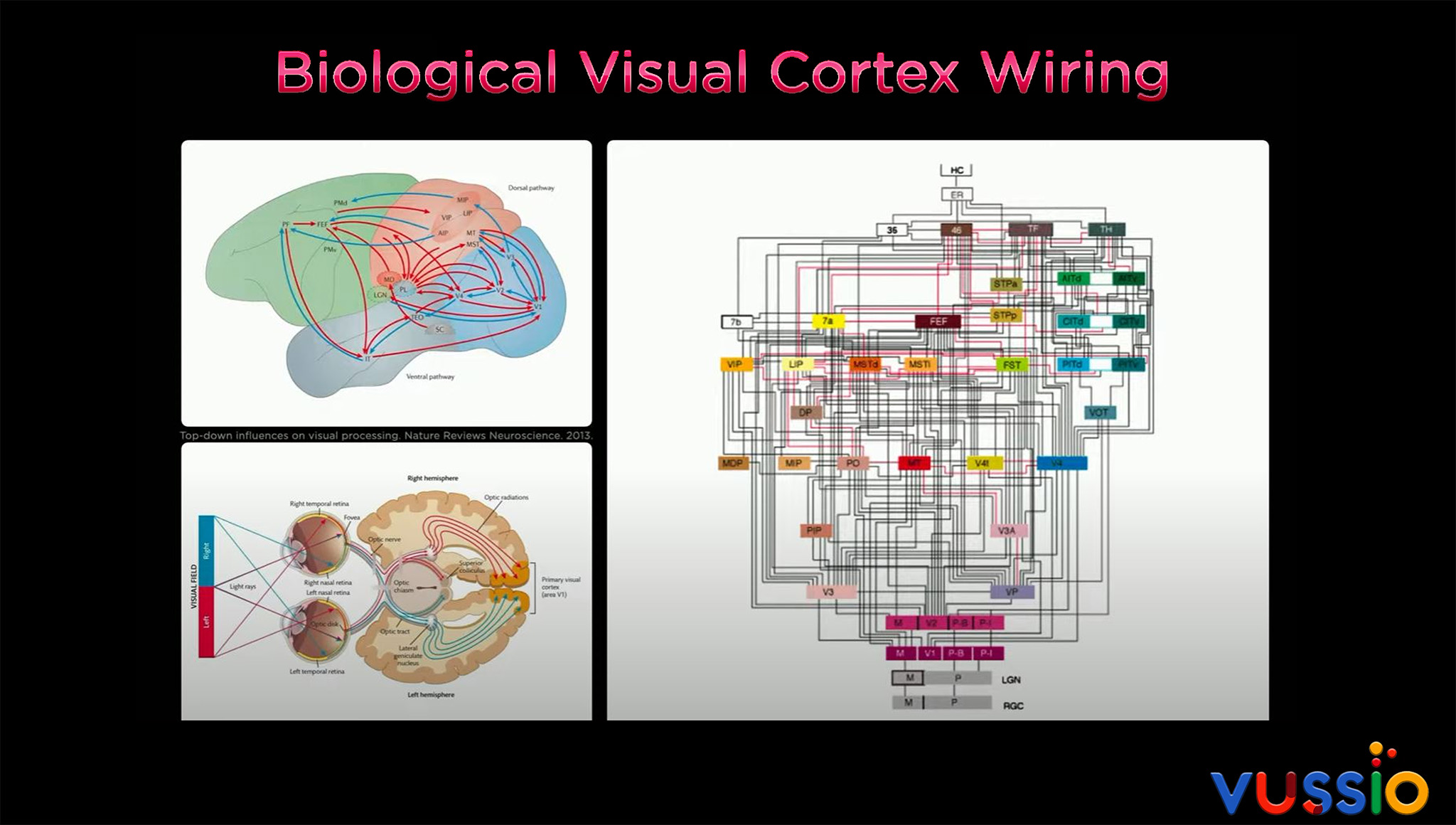

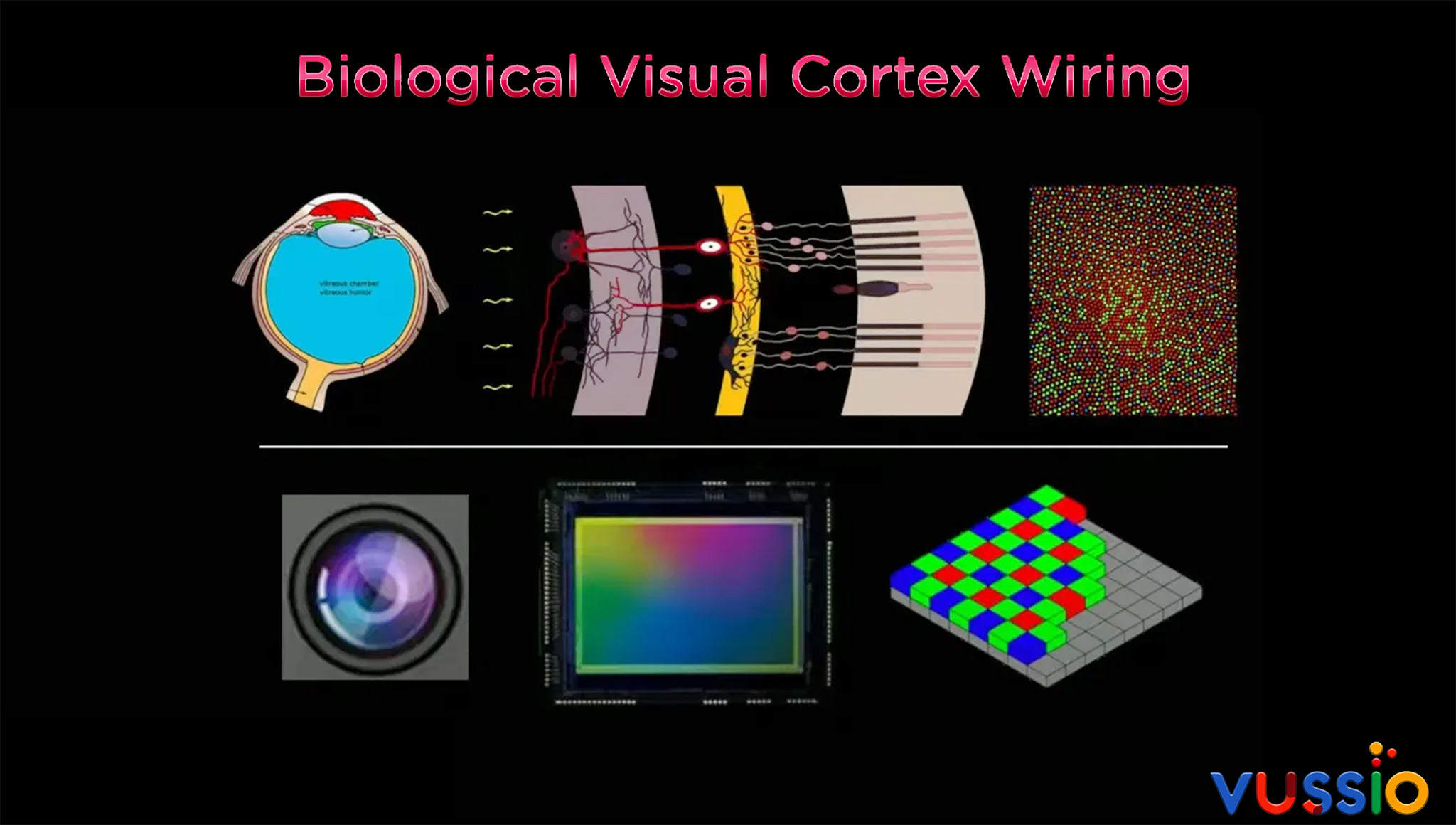

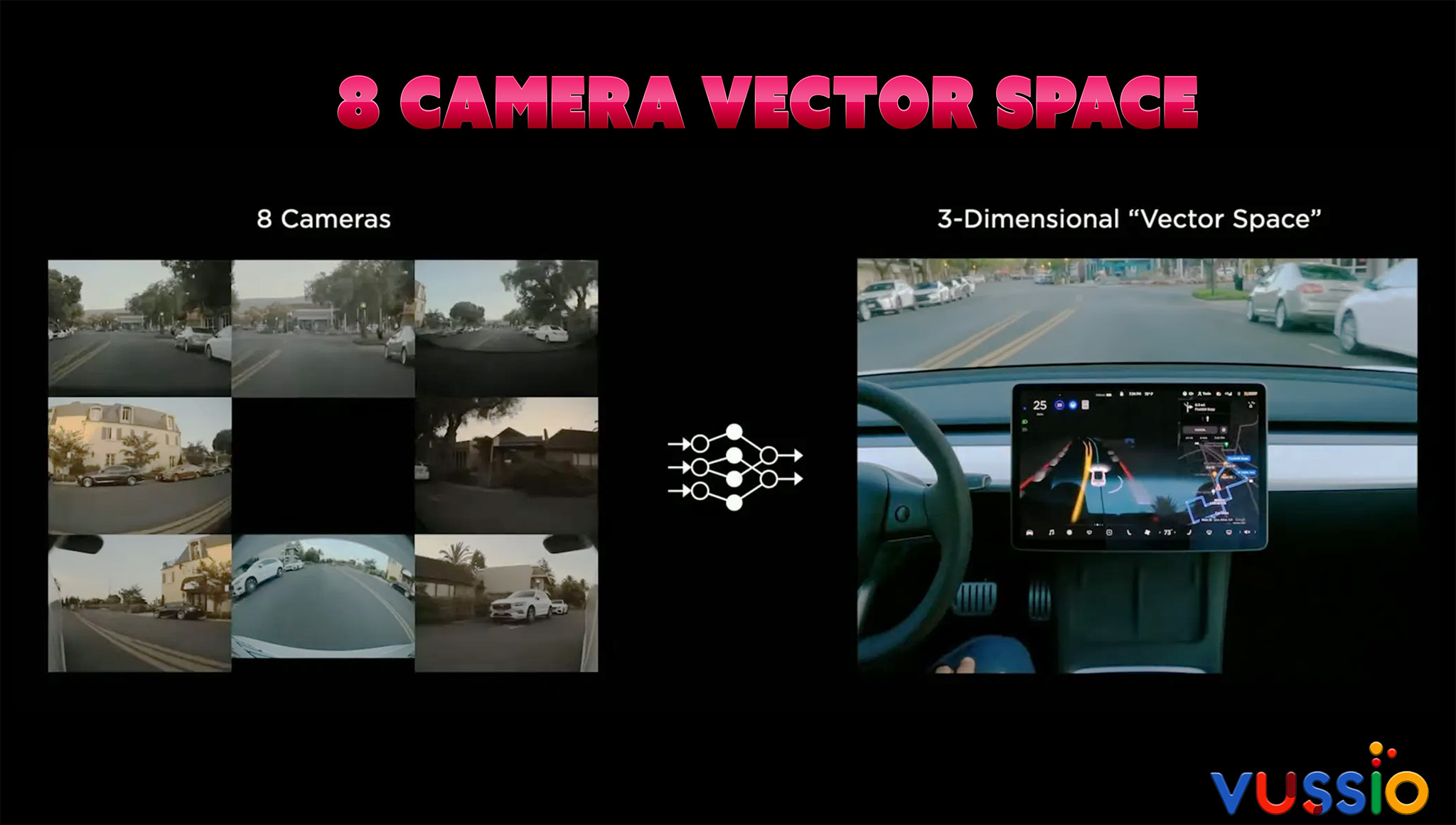

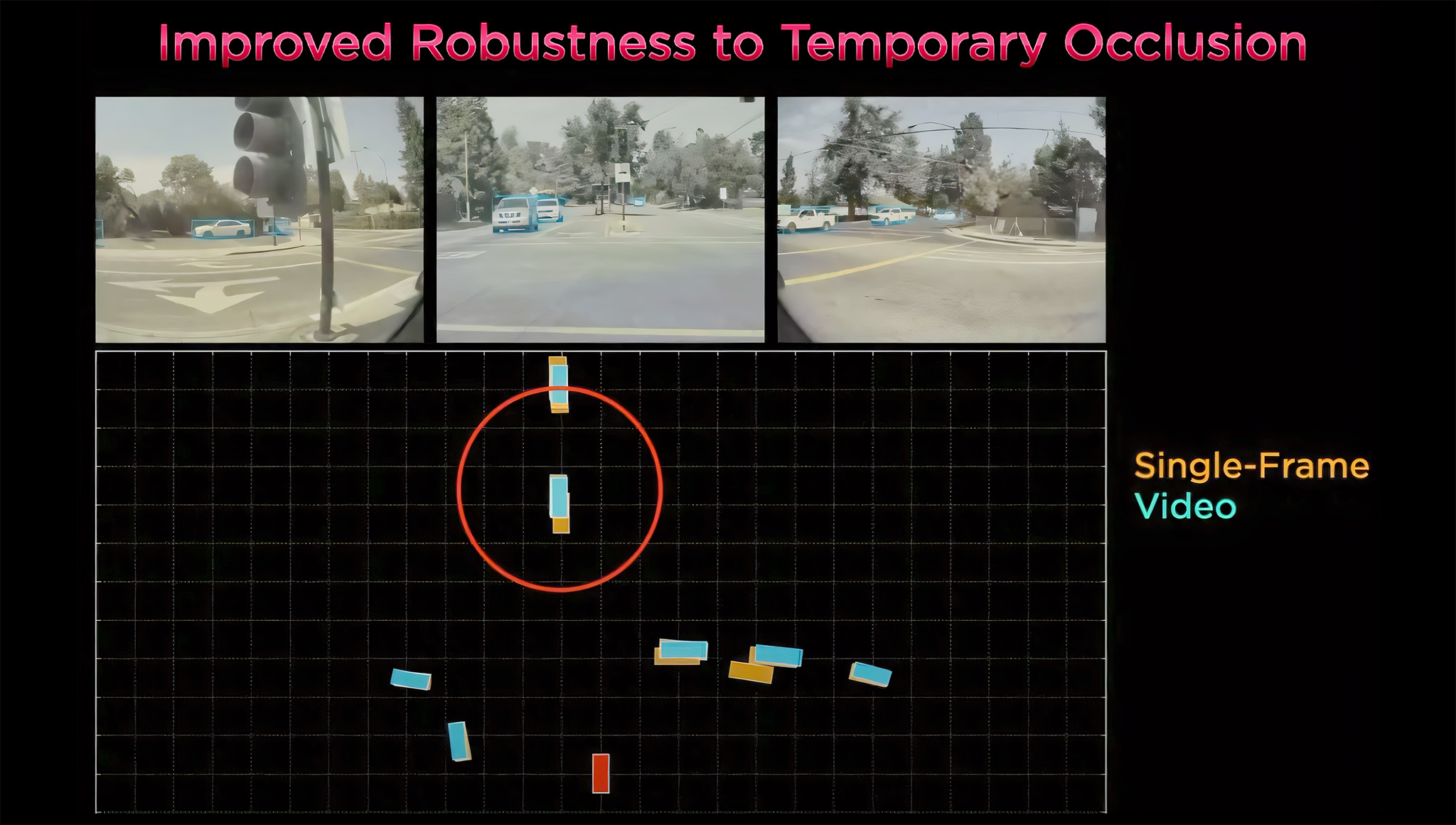

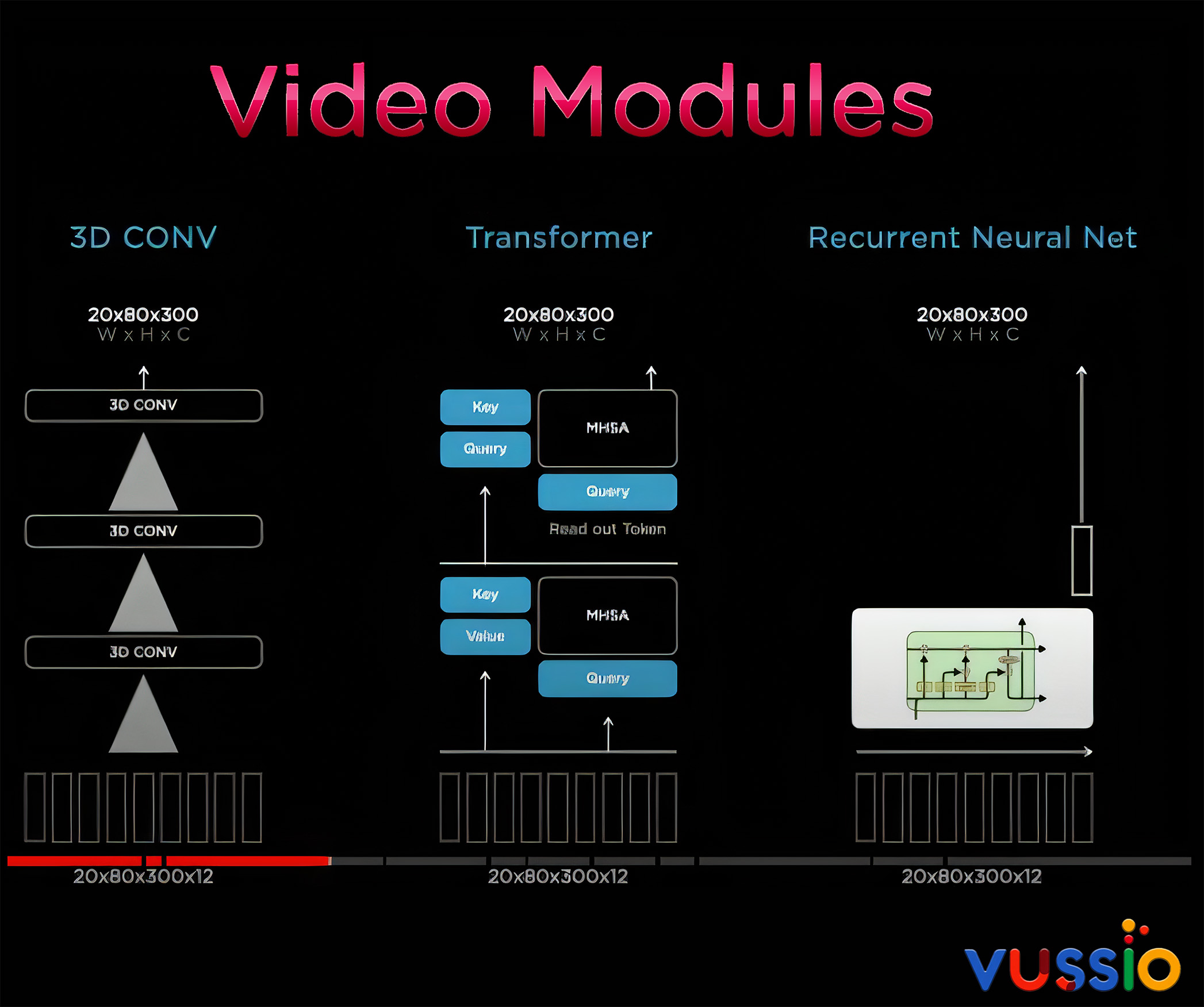

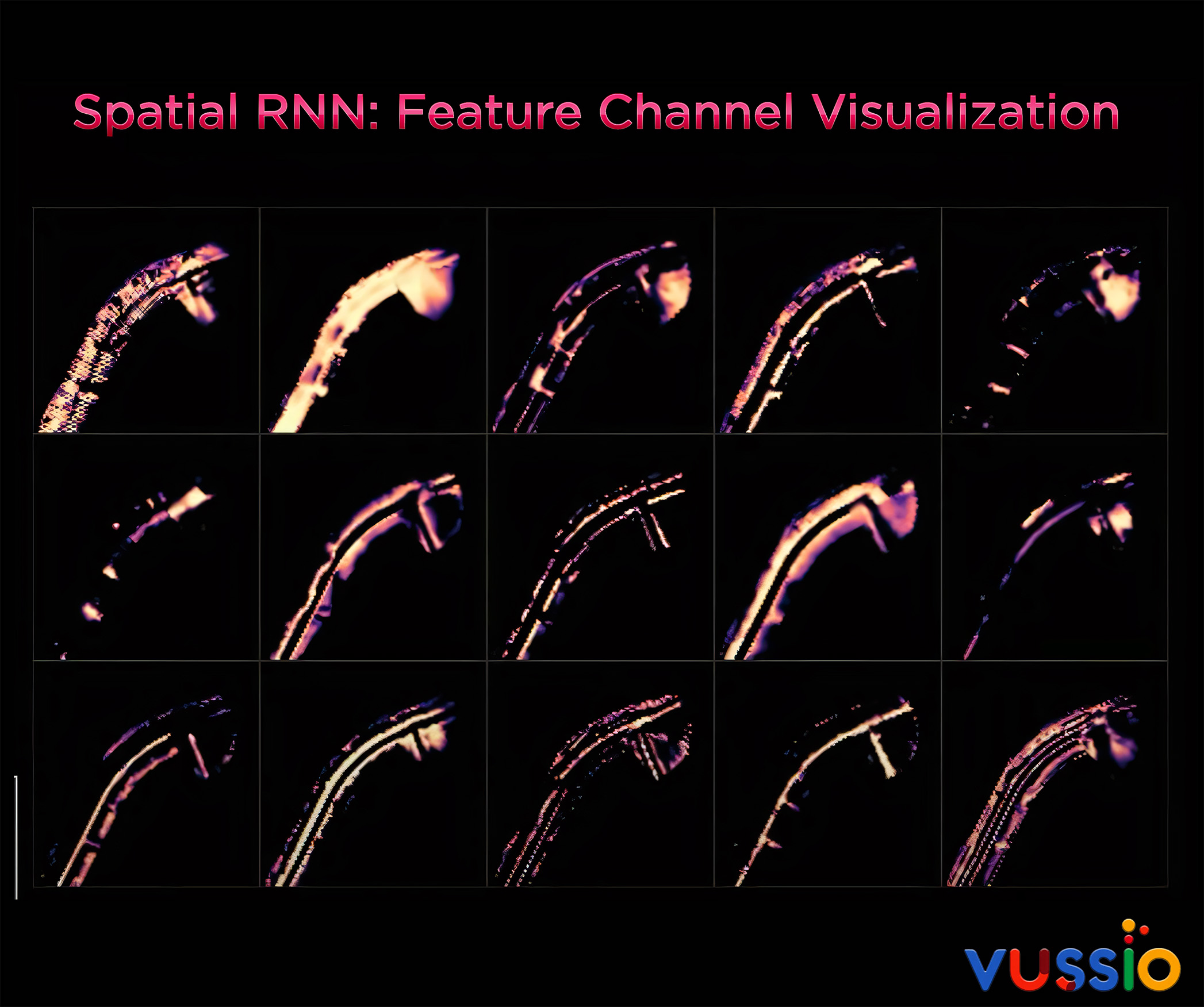

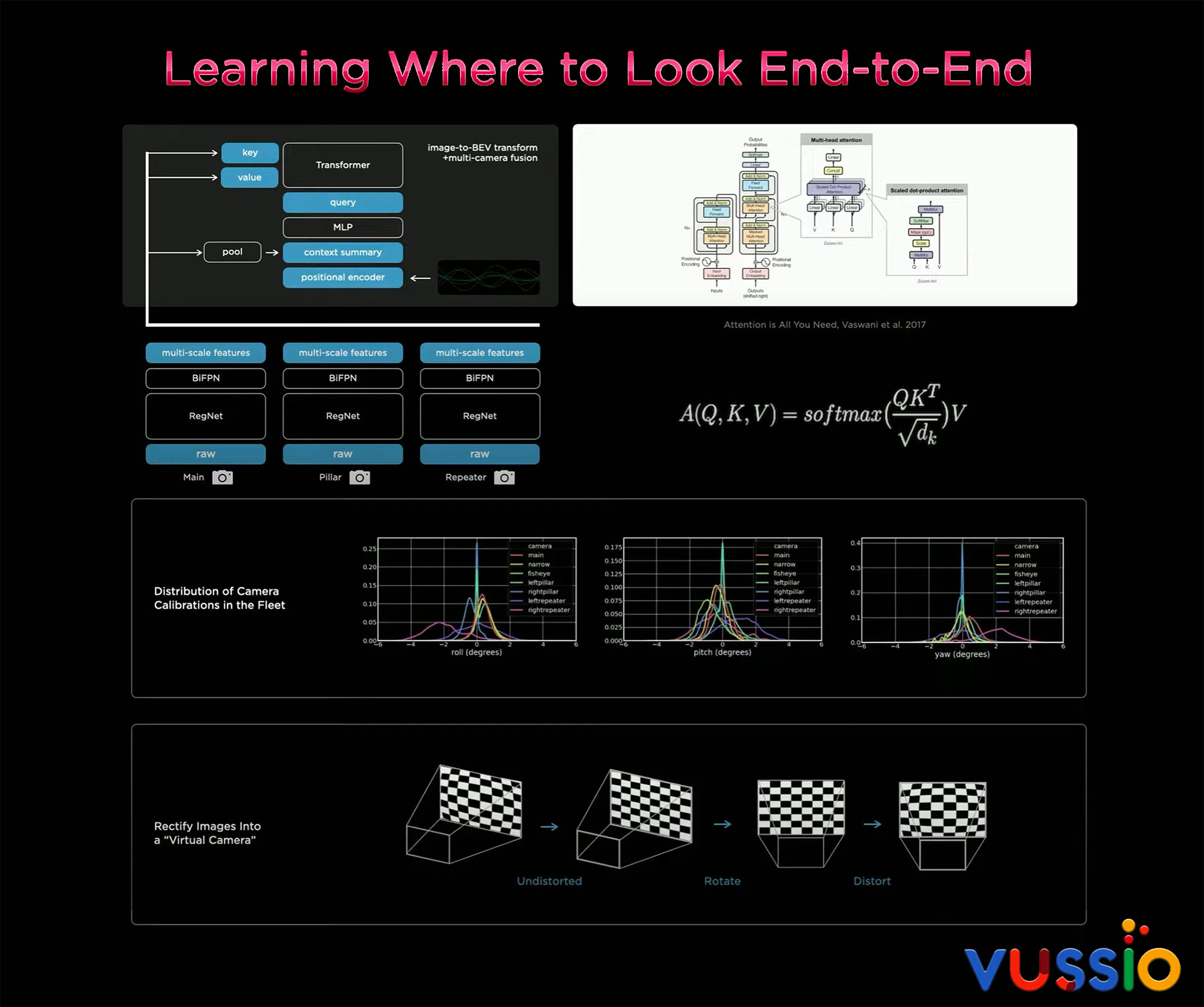

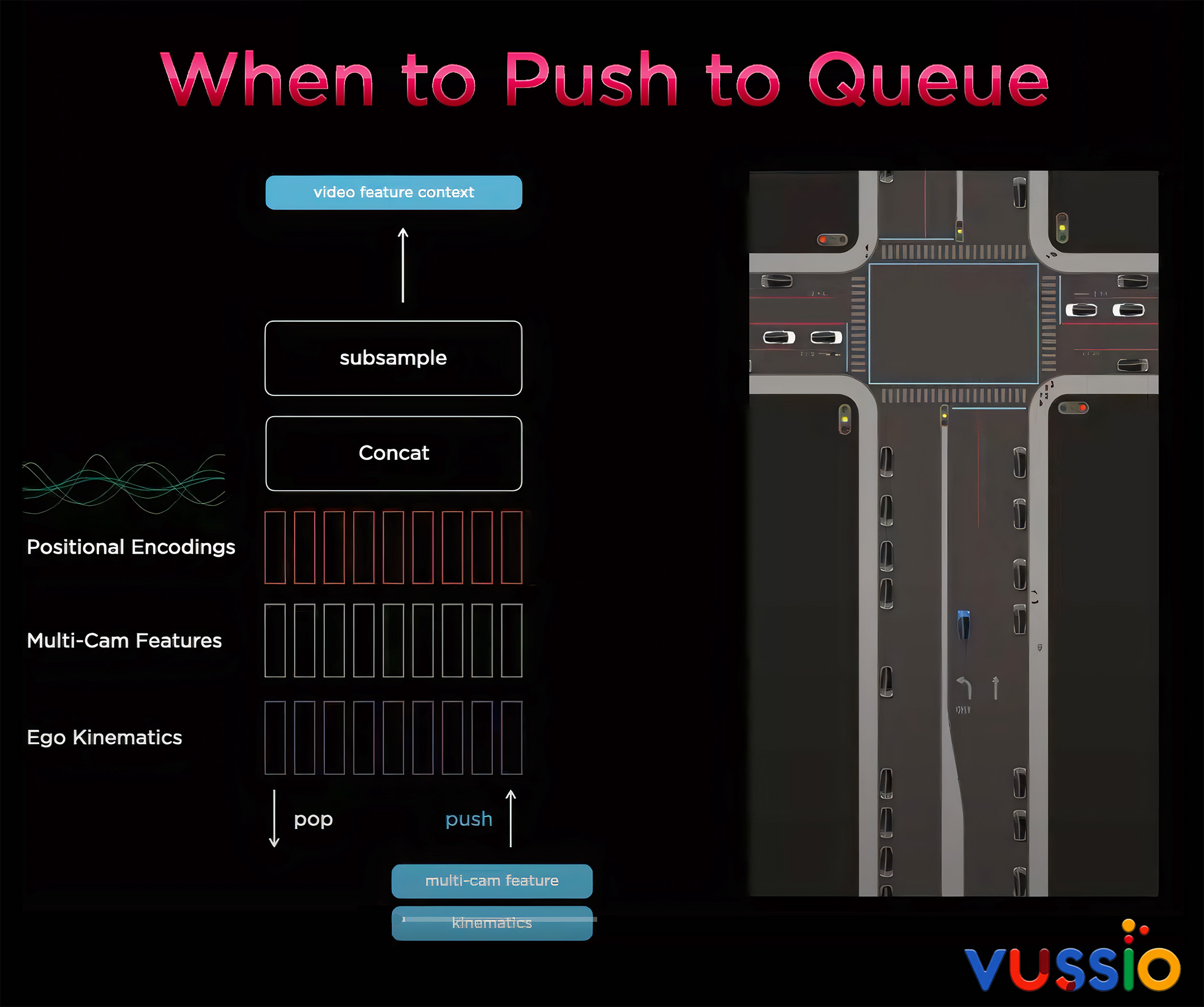

Tesla’s published materials and public commentary emphasize technical features like neural network architecture and real-time processing rather than explicit ethical choice mechanisms. There is no formal statement from Tesla about its ethical framework (e.g., Utilitarianism, Deontology, or Virtue Ethics). However, in 2021 the company held an AI summit, and the video breaks down its technology in extreme detail. While it’s definitely worth watching, it’s a long one. You can browse through the key images I’ve pulled from it instead.

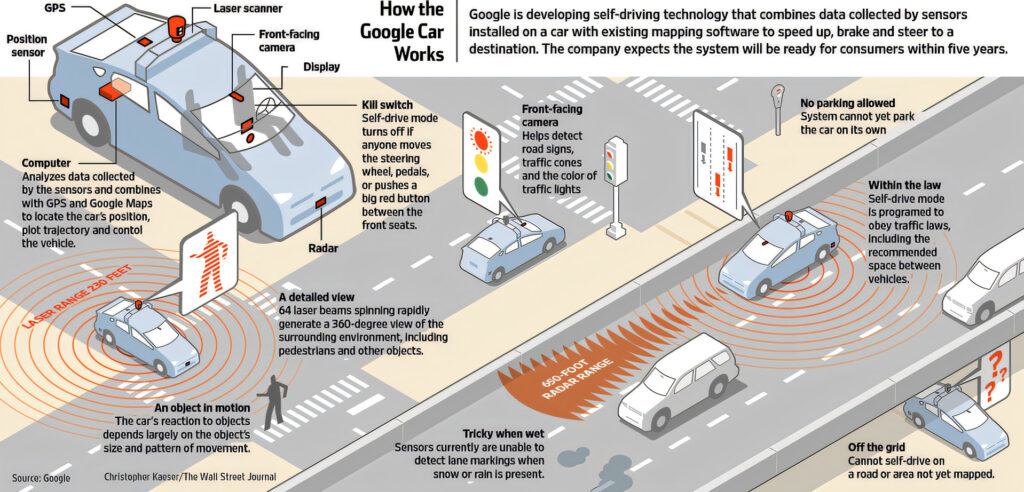

Compliance With Traffic Laws and Regulations

Tesla's Full Self-Driving (FSD) software is coded to comply with speed limits, signs, signals, and right-of-way rules. In many cases, this compliance reduces the number of potential dilemmas (e.g., if a car stops at a red light, it avoids the need to "choose" between hitting one group of pedestrians or another).

Collision Avoidance as a Priority

The system is principally engineered to prevent accidents by activating emergency braking or evasive maneuvers when it perceives a likely collision. The predominant reasoning here is to avoid all crashes, rather than to weigh how many human lives might be endangered in a given scenario.

Layered Safety and Override Mechanisms

Currently, the human driver can take control at any moment, and both Tesla and the law expect the driver to do so if the software makes an uncertain or risky decision. The system is designed around this expectation, which sets up a shared-responsibility model between the AI and the human driver.
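The three layers described in this section (human override, collision avoidance, traffic-law compliance) can be pictured as a simple priority-ordered check. The sketch below is purely hypothetical and is not Tesla's code: the `Situation` fields, the `decide` function, and the 2-second threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    """Hypothetical snapshot of what the car knows at one instant."""
    driver_override: bool       # the human has taken the wheel or pedals
    time_to_collision_s: float  # estimated seconds to impact; float("inf") if none
    speed_mph: float
    speed_limit_mph: float
    light_is_red: bool


# Assumed threshold, chosen only for this example.
TTC_BRAKE_THRESHOLD_S = 2.0


def decide(s: Situation) -> str:
    """Check the layers in priority order and return the first that applies."""
    if s.driver_override:
        return "yield control to driver"
    if s.time_to_collision_s < TTC_BRAKE_THRESHOLD_S:
        return "emergency brake"
    if s.light_is_red:
        return "stop at signal"
    if s.speed_mph > s.speed_limit_mph:
        return "slow to speed limit"
    return "continue"


print(decide(Situation(False, 1.2, 40.0, 35.0, False)))
print(decide(Situation(False, float("inf"), 40.0, 35.0, False)))
```

Note that nothing in this ordering counts lives. The logic avoids any collision it can and otherwise follows the rules of the road, which matches the design priority described above.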

Why No Overt “Ethical Framework” Labeling?

Focus on Engineering, Not Philosophy: Tesla’s published materials and public comments emphasize technical engineering features, such as neural network architecture and real-time processing, rather than ethical choice mechanisms or public relations concerns.

Responsibilities & Oversight

Automakers and regulators usually emphasize adherence to safety regulations and traffic laws. They recognize, in principle, that there might be ethical "trolley problems" to resolve, but they don't require a self-driving car to take a particular philosophical stance or to say what kind of ethical decisions the car might make when faced with one of those problems.

The ethical dilemmas posed by self-driving cars reflect the moral and philosophical challenges humanity has wrestled with since the birth of consciousness. Consider this: we are delegating decisions of life and death not to individuals but to the logic of algorithms. In doing so, we are outsourcing the responsibility of moral reasoning to something incapable of experiencing the existential weight of such decisions. This raises many questions: is it beneficial to rely on an algorithm over emotion? What does it say about humankind that we are willing to abdicate these responsibilities?

Theoretically, AI could eliminate the errors that stem from human frailty such as distraction, intoxication, or fatigue. But what moral framework will its artificial decision-making follow? These are questions reminiscent of the ancient ethical puzzles debated by Aristotle and Kant, but recast in the cold clinical precision of machine logic.

But beyond the practical and regulatory challenges lies a deeper, more unsettling question: are we prepared, as a society, to confront the ethical mirror these machines hold up to us? They are a kind of Promethean fire, offering both illumination and the risk of immense destruction, depending on how they are managed. As we stand on the threshold of this new frontier, these machines will not only transform how we move through space but also compel us to navigate the labyrinth of our own moral imagination. As we grapple with the implications of these technological advancements, we must also reflect on the values that guide our interactions with them. The challenge lies in ensuring that, amidst the allure of progress, we prioritize compassion and responsibility, forging a path that uplifts both individuals and the collective conscience of society. These intersections of technology and ethics remind us that our quest for knowledge must be balanced with humility and respect for the diverse experiences that shape our global community. Only by embracing these complexities can we hope to cultivate a future that honors the lessons of the past while fostering innovation that benefits all.

Fatal AI Self Driving Car Crashes

AI decision-making in life-and-death scenarios is far from straightforward. Although self-driving technology holds out the promise of safer roads, the present reality has thrown a wrench into that vision. Tesla’s Autopilot system has been connected to at least 13 deadly wrecks in which drivers misused the system in ways the company should have foreseen and done more to prevent. Below, you can explore the details of five crashes, three of them fatal, and the legal actions taken in their aftermath.

1) Tesla Model 3 Fatal Crash (2019):

A Tesla Model 3 on Autopilot in Florida struck a semi-trailer that was crossing in front of it. The driver was killed. The car's sensors simply failed to "see" the trailer, and they weren't helped by the fact that the trailer had no lights on the side facing the Tesla. Neither Autopilot nor the driver applied the brakes in time to prevent the collision.

Legal Consequence:

The NTSB criticized Tesla for not having effective monitoring systems for its drivers. A lawsuit filed against Tesla by the victim's family has resulted in ongoing legal proceedings. The incident has also increased both regulatory and public pressure on Tesla to better address the limitations of its Autopilot system.

2) Uber Self-Driving Car Accident (2018):

An Uber autonomous test vehicle struck and killed a pedestrian who was crossing the street at night in Arizona. The vehicle's sensors detected the pedestrian but failed to classify her as a human, which delayed the braking response. This incident raised a lot of questions about the safety protocols that Uber has in place when it tests its autonomous vehicles.

Legal Consequence:

The family of the victim settled with Uber, so the ride-hailing company avoided a prolonged court fight over liability for the fatal crash. Meanwhile, Arizona zeroed in on Uber's testing program and suspended the company's permission to test autonomous vehicles on public roads.

3) Tesla Autopilot Fatality (2016):

In Florida, a Tesla Model S operating on Autopilot failed to handle a tractor-trailer crossing its path. On May 7, 2016, a bright sun was shining in a clear sky as the Model S approached a highway interchange and the truck crossed in front of it. The car's sensors, essentially a basic camera and radar system, registered neither the white side of the trailer nor the fact that it was blocking the Tesla's path. The Model S passed under the trailer at 74 mph, according to the NTSB.

Legal Consequence:

The NHTSA determined that Tesla's Autopilot was not defective but stressed the need for clearer communication about how attentive drivers must be when using the system. Tesla faced no penalties, but its reputation took a hit, which seems to have prompted it to institute new safety measures and warnings in the user interface.

4) Waymo Collision with Motorcycle (2018):

In Mountain View, California, a Waymo self-driving minivan, in the autonomous driving mode with a safety driver aboard, swerved to avoid a car that was veering into its lane and collided with a motorcycle. The motorcyclist was injured. The incident illustrates the kinds of decisions that autonomous systems must make in complex situations that require split-second judgments.

Legal Consequence:

Although no legal action followed, the incident led Waymo to make a public commitment to enhance its algorithms. Safety advocates and regulators kept a close eye on the company and on this incident in particular, and that scrutiny likely influenced the software updates and revised protocols that followed.

5) Tesla Autopilot Crash into Parked Firetruck (2018):

A Tesla Model S on Autopilot struck a fire truck parked on a California freeway while traveling at roughly 60 mph. The driver of the Tesla suffered minor injuries. The Model S's sensors did not detect the large, stationary emergency vehicle, and the system did not warn the driver in advance.

Legal Consequence:

The NTSB found Tesla's safeguards insufficient to ensure driver attention. It did not issue any fines or penalties, but the finding increased regulatory pressure on Tesla to set clearer guidelines for semi-autonomous systems and to do more to keep drivers attentive.

Wrapping it all Together

As we hand over control to machines, we must wrestle with the implications of ceding moral responsibility to algorithms, and eventually we’ll need to confront the ethical blind spots they reveal. These dilemmas aren’t theoretical, and they force us to question who bears the burden of accountability when a system goes wrong. Without clear answers, we risk building a future where human lives are reduced to mere variables in a cold calculation. The question isn’t whether AI can make these decisions but whether we, as a society, can live with the decisions AI will inevitably make.

The true measure of progress isn’t just in the sophistication of the technology but in how deeply we consider its moral and societal consequences. If we don’t, we risk becoming passive participants in our own moral abdication, outsourcing not only control but our humanity.

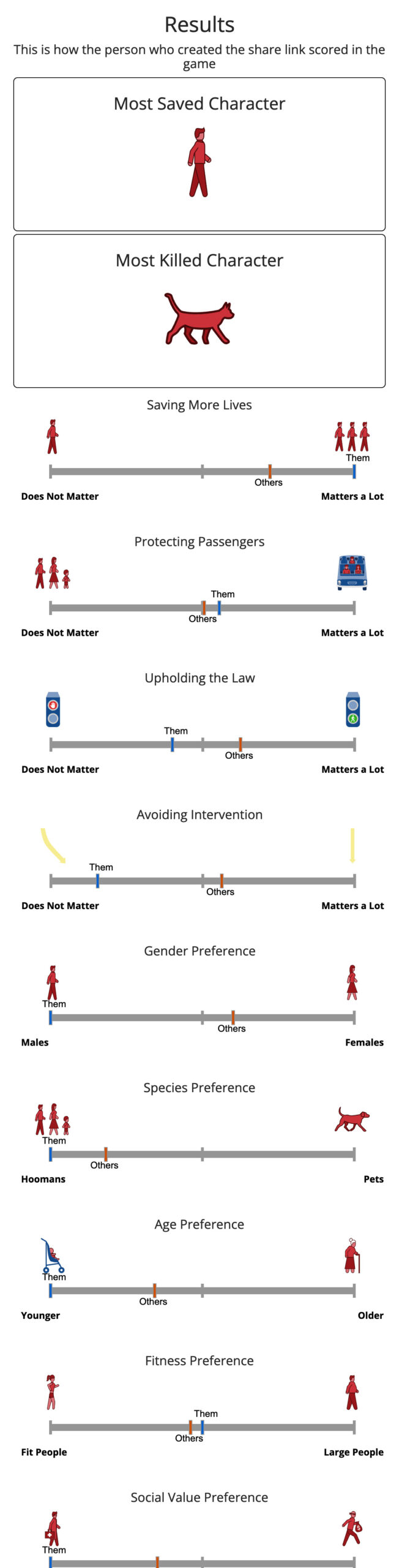

BONUS: MIT’s Moral Machine Test

Enter MIT’s Moral Machine. It’s a project that hands these moral dilemmas over to the public and asks them to decide, for example, whether the car should plow into a jaywalking teenager or an elderly woman who followed the rules of the crosswalk. What’s fascinating is comparing your choices to the average values of others who’ve also taken the test. Try taking the test!

