TL;DR: Artificial intelligence is transforming prisons with tools for surveillance, predictive analytics, communication monitoring, wearable tech, and rehabilitation. AI improves safety and efficiency, like detecting fights or escape plans, but raises ethical concerns about bias, false positives, and privacy erosion. While these technologies may enhance inmate management and rehabilitation, critics argue they risk creating a dystopian surveillance state. Balancing control with compassion and accountability is crucial to prevent misuse of this powerful technology.

The modern world is almost fully integrated with artificial intelligence, and prisons are starting to benefit from AI innovations too. What were once the stone and steel bastions of human oversight have begun to see the “unblinking eyes and tireless ears” of AI. It’s a big jump from the old pen-and-paper surveillance to today’s buzzword-laden promise of “smart prisons”. Is a smarter prison better for genuine justice, or is it just another step on the road to a high-tech dystopia?

How AI is Taking Over Prisons

Let’s begin with the facts: incarcerated individuals should know something is up when prisons start getting smart. Artificial intelligence is currently being employed in correctional facilities for a variety of purposes, from monitoring inmate behavior to analyzing communications and even predicting incidents. Proponents say the use of AI has proven highly beneficial, making such facilities safer and more efficient. However, critics claim that the mini-dystopia being created in U.S. prisons may ultimately pose serious constitutional concerns.

1. AI-Powered Surveillance

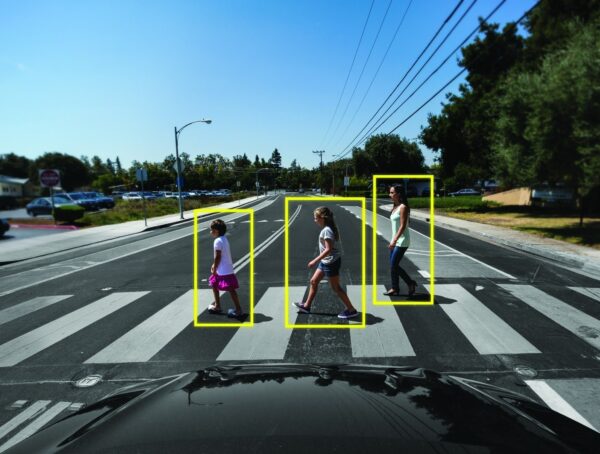

Envision a universe in which an all-knowing AI super-system watches every move, gesture, and facial expression. Smart cameras equipped with AI analyze live video feeds to detect unusual behavior. Fights, self-harm, or unauthorized gatherings can trigger immediate alerts to correctional staff. (Equivant Corrections)

2. Predictive Analytics: Thought Crime, Anyone?

Now, let’s look at predictive analytics. These systems use historical data on inmate behavior and claim to predict future incidents, like fights and escape attempts. The reasoning is simple: If you can predict it, you can prevent it, right? But let’s pause and consider this. If an algorithm flags an inmate as likely to commit an infraction, what does that mean for the level of scrutiny or restricted privileges they will encounter? Are we punishing people not for what they’ve done but for what they might do? The UK is looking at this technology and says it could help prevent self-harm and inmate-on-inmate violence. (The Times)

3. Monitoring Communication

The most contentious AI application in prisons is monitoring inmate communications. Prisons use this tool to read and listen to what inmates are saying. Suffolk County Jail in New York uses AI to analyze phone calls made by inmates, scanning 600,000 minutes of calls. And it’s not alone: this happens across the U.S. And when I say analyze, I mean these phone calls are scanned by an algorithm to look for anything suspicious, such as a drug deal or escape plan that might be in the works. (ABA Journal)

Discussions of these systems increasingly turn on two words that you’d do well to remember: “false positives.” When an algorithm wrongly flags an innocent conversation, what happens to that inmate? Could he face harsher scrutiny, segregation, or even isolation? These aren’t just technical glitches; they’re real consequences that impact human lives.
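To see why false positives matter so much at this scale, here is a minimal sketch of the arithmetic in Python. Every number in it is hypothetical and chosen only for illustration: the call volume, the share of calls that are genuinely suspicious, and the detector's hit and false-alarm rates are assumptions, not figures from any jail or vendor. The function name `flag_breakdown` is likewise made up for this example.

```python
def flag_breakdown(total_calls, prevalence, sensitivity, false_positive_rate):
    """Split a screening system's flags into true and false alarms.

    prevalence: share of calls that are genuinely suspicious
    sensitivity: share of suspicious calls the system flags
    false_positive_rate: share of innocent calls the system flags anyway
    """
    suspicious = total_calls * prevalence
    innocent = total_calls - suspicious
    true_flags = suspicious * sensitivity
    false_flags = innocent * false_positive_rate
    total_flags = true_flags + false_flags
    # Precision: of all flagged calls, the share that were actually suspicious
    precision = true_flags / total_flags if total_flags else 0.0
    return true_flags, false_flags, precision


# Hypothetical inputs, for illustration only
true_flags, false_flags, precision = flag_breakdown(
    total_calls=100_000,
    prevalence=0.001,          # assume 1 in 1,000 calls is truly suspicious
    sensitivity=0.95,          # assume the system catches 95% of those
    false_positive_rate=0.02,  # assume it wrongly flags 2% of innocent calls
)
print(f"true flags: {true_flags:.0f}, false flags: {false_flags:.0f}")
print(f"share of flags that are real: {precision:.1%}")
```

Under these made-up assumptions, a system that sounds accurate on paper still produces roughly 95 genuine flags against about 2,000 false ones, so fewer than 1 in 20 flagged calls involves anything suspicious. The exact ratio depends entirely on the inputs, but the pattern holds whenever the thing being searched for is rare.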

4. Wearable Devices: Surveillance on the Skin

Some correctional facilities are trying out wearable technology that tracks inmates’ vital signs and whereabouts. These wristbands can keep tabs on heart rate, sense when someone is under stress, and confirm that inmates are where they’re supposed to be.

It’s technology that could save lives, especially in emergencies. But wearables also raise an unnerving issue concerning bodily autonomy. If this technology is misused or mishandled, it could strip inmates of the last vestiges of privacy they have, reducing them to little more than walking metrics in a system designed to control every aspect of their existence. Moreover, the implications of such surveillance extend beyond the prison system and echo in broader conversations about personal freedoms in a tech-driven society. Just as the ethical dilemmas of self-driving cars challenge our understanding of responsibility and choice, so too do these wearables force us to confront the boundaries between safety and individual rights. As we navigate these advancements, we must weigh the benefits against the psychological toll of being monitored, ensuring that technology serves to empower rather than imprison.

5. AI in Rehabilitation

Not all applications of artificial intelligence in correctional facilities are aimed at controlling the population. Some are meant to help. Virtual reality programs powered by AI are used to teach inmates life skills and provide therapy. Picture a convict wearing a VR headset and practicing for a job interview or resolving a personal conflict. That’s what you call thinking outside the cell to diminish the number of inmates who return to prison. But can an algorithm make sense of human change? (MIT Technology Review)

The Ethical Dilemma: Control vs. Compassion

We must confront some deep ethical questions if we’re going to put AI in prisons. Are these tools really going to make prisons safer and help rehabilitate prisoners, or are they just going to make our prisons even more like the dreaded Orwellian surveillance state? The power dynamics in prisons are skewed enough as it is, and many prisons already skirt human rights. If we add AI, with its well-known potential for societal bias, into that precarious mix, are we really making prisons better?

What Does This Mean for Society?

The debate over AI monitoring boils down to a tug-of-war between effectiveness and control, and let’s be honest, it’s messy. On one hand, AI monitoring promises unparalleled precision in evaluating behavior, performance, and compliance. It doesn’t blink, it doesn’t take coffee breaks, and it doesn’t care about personal vendettas. This means it can keep everything running like a well-oiled machine, catching inefficiencies and slip-ups faster than any human ever could. But the catch? That very efficiency opens a Pandora’s box of control issues. When every click, keystroke, or conversation becomes data, it’s not just about optimizing productivity; it also becomes about eroding privacy. Suddenly, workplaces and institutions start to feel less like professional environments and more like dystopian surveillance states. As organizations grapple with this dilemma, the ethical implications of AI monitoring become increasingly pronounced. Beyond just tracking productivity, the tension between surveillance and autonomy raises questions about trust and the human experience in the workplace. In navigating these complexities, one is often reminded of Turing’s Test and modern AI, as we consider whether machines can truly understand the nuances of human behavior or simply mirror them through relentless data analysis. The fundamental challenge lies in balancing the benefits of AI’s capabilities with the essential need for human dignity and privacy.

But here’s the twist: this same technology is a game-changer for environments where control and monitoring are essential, as in prisons. AI could revolutionize inmate management, improving safety, predicting violence, and ensuring compliance in ways that would have been impossible with human oversight alone. It’s remarkable technology with enormous promise, but let’s not sugarcoat it. What thrives in prisons today could creep into society tomorrow. We’re already living alongside this technology, marveling at its potential while tiptoeing around its dangers. If handled poorly, the promising aspects could give way to something far more sinister. The ethical dilemma isn’t just about control versus effectiveness; it’s about steering this powerful tool toward accountability without letting it pave the way for a surveillance-dominated future.
